Applies To: OfficeDev PnP, SharePoint, PowerShell

The SharePoint PnP Remote Provisioning engine is awesome. With just a couple of lines of code or some quick PowerShell you can have a deployable “template” for your SharePoint site (on-premises or O365). OfficeDev PnP offers much more, but it’s the provisioning aspect of things we’re going to talk about today.

Specifically, we’re going to talk about extending the process through the new ITemplateProviderExtension interface. In the August 2016 release the PnP team released the ability to create your own provider extensions and incorporate them directly in the retrieval and application of your PnP templates (Read the announcement here, see an example here).

These new extensions allow you to stick your custom logic directly into the generation of templates and the application of templates. This allows you to apply special tweaks, adjust output, generate additional objects/calls, etc. There are 4 entry points (see the interface below) that give you a lot of flexibility.

The Project

An extension is just a class that implements the ITemplateProviderExtension interface (more about this in a bit). If you are interfacing with the provisioning engine using .NET directly then you can just add the class to your project. More likely, however, you’ll want to add it as a Class Library (this is true for calling it through PowerShell as well).

In Visual Studio, add a new project of type Class Library (File > New > Project and select Class Library from the list of templates, give it a name, and click OK).

You’ll need to add the SharePoint PnP Core library NuGet package to your project. Right-click on your project in Solution Explorer and choose Manage NuGet Packages… In the NuGet Package Manager click on Online in the left pane and type PnP into the Search Online box in the upper-right. From the results pick the SharePoint PnP Core library that matches your targeted version and click Install (I’m using SharePoint PnP Core library for SharePoint 2013 since I am targeting on-premises SharePoint 2013).

This will take just a minute or so to copy everything into your project. You’ll probably be prompted to accept some licenses (just click accept). Once this is done, you can click Close.

The Interface

In your project you have a few files like Class1.cs, SharePointContext.cs and TokenHelper.cs. You can leave all of these (they won’t hurt anything). Right-click on Class1.cs and choose Rename. Enter the name of your extension. Visual Studio will also prompt you to rename the references for Class1 – Click Yes.

To implement the interface, you’ll want to slap a using statement for OfficeDevPnP.Core.Framework.Provisioning.Providers up on top of your extension class, then implement the ITemplateProviderExtension interface like this:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using System.Threading.Tasks;
    using OfficeDevPnP.Core.Framework.Provisioning.Providers;

    namespace MyExtension
    {
        public class CommentIt : ITemplateProviderExtension
        {
        }
    }

Right-click on ITemplateProviderExtension and select Implement Interface > Implement Interface to have the class stubbed out for you.

So what the heck is all this? Let’s go through the methods and talk about what you’re going to want to use.

Entry Points

Your template provider extension can intercept the template at 4 different entry points and then do whatever it is you want to do. I find the names of the entry points a little difficult to follow, but here’s where they’re called within the life cycle of the template:

- From SharePoint to Template (Get-SPOProvisioningTemplate)
    - Template object generated from SharePoint
    - Template serialized into XML
    - Template saved to file system
- From Template to SharePoint (Apply-SPOProvisioningTemplate)
    - Template loaded from file system as XML
    - Template deserialized from XML to Template object
    - Template object applied to SharePoint

And here’s a reference chart:

| Action | Template Is: Template Object | Template Is: XML Stream |
| --- | --- | --- |
| From SP to Template (Save) | PreProcessSaveTemplate | PostProcessSaveTemplate |
| Applying Template (Apply) | PostProcessGetTemplate | PreProcessGetTemplate |

Supports Properties

The Supports properties indicate to the provisioning engine which entry points your extension supports (where you want to inject your logic). You’ll need to edit each of these to remove the NotImplementedException and to return true when you want to inject during that point and false when you don’t.

For my extension, I just want to tweak the XML when someone is saving the template from SharePoint so here’s what mine look like:

    public bool SupportsGetTemplatePostProcessing
    {
        get { return (false); }
    }

    public bool SupportsGetTemplatePreProcessing
    {
        get { return (false); }
    }

    public bool SupportsSaveTemplatePostProcessing
    {
        get { return (true); }
    }

    public bool SupportsSaveTemplatePreProcessing
    {
        get { return (false); }
    }

Initialize

The Initialize method is where you can pass any settings and do any setup. For my extension, I am just passing a string that I will be inserting into the template XML:

    private string _comment;

    public void Initialize(object settings)
    {
        _comment = settings as string;
    }
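One thing worth knowing about the snippet above: the as operator quietly yields null when settings is not a string, and XML itself does not allow "--" inside a comment or a "-" right before the closing marker. If you would rather fail early than find out at save time, a more defensive Initialize could look something like the sketch below. CommentSettingsSketch is a hypothetical stand-in class that only shows the Initialize logic (it does not implement ITemplateProviderExtension), and the exception messages are just my wording.

```csharp
using System;

// Hypothetical stand-in: shows only a defensive Initialize, not a full extension.
public class CommentSettingsSketch
{
    private string _comment;

    public string Comment { get { return _comment; } }

    public void Initialize(object settings)
    {
        // "as" returns null (no exception) when settings is not a string
        string comment = settings as string;
        if (string.IsNullOrWhiteSpace(comment))
            throw new ArgumentException("Expected a non-empty string comment.", "settings");

        // XML 1.0 forbids "--" inside a comment and a "-" as its last character
        if (comment.Contains("--") || comment.EndsWith("-", StringComparison.Ordinal))
            throw new ArgumentException("Text is not valid inside an XML comment.", "settings");

        _comment = comment;
    }
}
```

Whether you throw or fall back to a default comment is a design choice; throwing in Initialize at least points the finger at the script that passed the bad value.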

Processing Methods

You only need to implement the methods where you indicated you were supporting them in the Supports properties. You can leave the rest with the default NotImplementedException in place.
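To see why the unimplemented methods are harmless, here is a rough sketch of how a pipeline could consult the Supports properties before calling each entry point, in the order shown in the reference chart. Everything in it is a stand-in: IExtensionSketch only mirrors the shape of ITemplateProviderExtension, a plain string plays the role of the ProvisioningTemplate object, and serialization is faked. It is not the engine's actual code, just an illustration of the idea.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical stand-in for ITemplateProviderExtension (string replaces ProvisioningTemplate).
public interface IExtensionSketch
{
    bool SupportsSaveTemplatePreProcessing { get; }
    bool SupportsSaveTemplatePostProcessing { get; }
    bool SupportsGetTemplatePreProcessing { get; }
    bool SupportsGetTemplatePostProcessing { get; }
    string PreProcessSaveTemplate(string template);
    Stream PostProcessSaveTemplate(Stream stream);
    Stream PreProcessGetTemplate(Stream stream);
    string PostProcessGetTemplate(string template);
}

public static class PipelineSketch
{
    // Save: template object -> PreProcessSave -> serialize -> PostProcessSave
    public static Stream Save(string template, IEnumerable<IExtensionSketch> extensions)
    {
        foreach (var ext in extensions)
            if (ext.SupportsSaveTemplatePreProcessing)
                template = ext.PreProcessSaveTemplate(template);

        Stream stream = new MemoryStream(Encoding.UTF8.GetBytes(template)); // fake "serialize"

        foreach (var ext in extensions)
            if (ext.SupportsSaveTemplatePostProcessing)
                stream = ext.PostProcessSaveTemplate(stream);
        return stream;
    }

    // Apply: XML stream -> PreProcessGet -> deserialize -> PostProcessGet
    public static string Get(Stream stream, IEnumerable<IExtensionSketch> extensions)
    {
        foreach (var ext in extensions)
            if (ext.SupportsGetTemplatePreProcessing)
                stream = ext.PreProcessGetTemplate(stream);

        string template = new StreamReader(stream, Encoding.UTF8).ReadToEnd(); // fake "deserialize"

        foreach (var ext in extensions)
            if (ext.SupportsGetTemplatePostProcessing)
                template = ext.PostProcessGetTemplate(template);
        return template;
    }
}

// Sample extension: opts in to save post-processing only, so the other methods are never called.
public class UpperCaseOnSave : IExtensionSketch
{
    public bool SupportsSaveTemplatePreProcessing { get { return false; } }
    public bool SupportsSaveTemplatePostProcessing { get { return true; } }
    public bool SupportsGetTemplatePreProcessing { get { return false; } }
    public bool SupportsGetTemplatePostProcessing { get { return false; } }

    public string PreProcessSaveTemplate(string template) { throw new NotImplementedException(); }
    public Stream PreProcessGetTemplate(Stream stream) { throw new NotImplementedException(); }
    public string PostProcessGetTemplate(string template) { throw new NotImplementedException(); }

    public Stream PostProcessSaveTemplate(Stream stream)
    {
        string text = new StreamReader(stream, Encoding.UTF8).ReadToEnd();
        return new MemoryStream(Encoding.UTF8.GetBytes(text.ToUpperInvariant()));
    }
}
```

Running UpperCaseOnSave through both Save and Get never touches the three throwing methods, because their Supports properties return false.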

For this example, I just want to tweak the XML when someone is saving the template from SharePoint so I returned true for the SupportsSaveTemplatePostProcessing property which means I need to implement the PostProcessSaveTemplate method. For what I’m doing, you’ll need a few more using statements:

    using System.IO;
    using System.Xml;
    using OfficeDevPnP.Core.Framework.Provisioning.Providers.Xml;

Here are my methods:

    public OfficeDevPnP.Core.Framework.Provisioning.Model.ProvisioningTemplate PostProcessGetTemplate(OfficeDevPnP.Core.Framework.Provisioning.Model.ProvisioningTemplate template)
    {
        throw new NotImplementedException();
    }

    public System.IO.Stream PostProcessSaveTemplate(System.IO.Stream stream)
    {
        MemoryStream result = new MemoryStream();

        //Load up the Template Stream to an XmlDocument so that we can manipulate it directly
        XmlDocument doc = new XmlDocument();
        doc.Load(stream);
        XmlNamespaceManager nspMgr = new XmlNamespaceManager(doc.NameTable);
        nspMgr.AddNamespace("pnp", XMLConstants.PROVISIONING_SCHEMA_NAMESPACE_2016_05);

        XmlNode root = doc.SelectSingleNode("//pnp:Provisioning", nspMgr);
        XmlNode commentNode = doc.CreateComment(_comment);
        root.PrependChild(commentNode);

        //Put it back into stream form for other provider extensions to have a go and to finish processing
        doc.Save(result);
        result.Position = 0;

        return (result);
    }

    public System.IO.Stream PreProcessGetTemplate(System.IO.Stream stream)
    {
        throw new NotImplementedException();
    }

    public OfficeDevPnP.Core.Framework.Provisioning.Model.ProvisioningTemplate PreProcessSaveTemplate(OfficeDevPnP.Core.Framework.Provisioning.Model.ProvisioningTemplate template)
    {
        throw new NotImplementedException();
    }

This is a pretty silly example, but here’s what the code above is doing in the PostProcessSaveTemplate method:

- The MemoryStream declaration: The method expects us to return the transformed XML stream when we’re done making our tweaks, so we’re just getting it ready
- The XmlDocument and XmlNamespaceManager lines: We can use the native XmlDocument objects to interact with the XML Stream. We just load it into a document and account for the pnp namespace
- The SelectSingleNode call: We find the root node of the XML Template using XPath and the namespace
- The CreateComment call: We generate a new XML Comment using the string passed into our Initialize method
- The PrependChild call: We jam the comment into the root node so it shows up right at the top
- The doc.Save call through the return: We save the modified XmlDocument to our result stream, reset it, then pass it along its way
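If you want to poke at the XML logic without a SharePoint site handy, the same steps can be tried against an in-memory stream. The sketch below is only that, a sketch: CommentSketch, AddComment and Demo are names I made up, the tiny input document is not a real template, and the namespace URI is hard-coded on the assumption that it matches what XMLConstants.PROVISIONING_SCHEMA_NAMESPACE_2016_05 resolves to (so there is no PnP dependency). I also added a null check on the root node, which the extension above skips.

```csharp
using System;
using System.IO;
using System.Text;
using System.Xml;

public static class CommentSketch
{
    // Assumption: the 2016-05 PnP schema namespace, hard-coded in place of the XMLConstants value
    const string PnpNamespace = "http://schemas.dev.office.com/PnP/2016/05/ProvisioningSchema";

    public static Stream AddComment(Stream stream, string comment)
    {
        // Load the stream into an XmlDocument and register the pnp prefix
        XmlDocument doc = new XmlDocument();
        doc.Load(stream);
        XmlNamespaceManager nspMgr = new XmlNamespaceManager(doc.NameTable);
        nspMgr.AddNamespace("pnp", PnpNamespace);

        // Find the root node; bail out clearly if the schema version does not match
        XmlNode root = doc.SelectSingleNode("//pnp:Provisioning", nspMgr);
        if (root == null)
            throw new InvalidOperationException("No pnp:Provisioning element found.");

        // Insert the comment as the first child of the root node
        root.PrependChild(doc.CreateComment(comment));

        // Write the document to a fresh stream and rewind it for the next reader
        MemoryStream result = new MemoryStream();
        doc.Save(result);
        result.Position = 0;
        return result;
    }

    // Runs AddComment over a minimal, made-up document and returns the resulting XML text
    public static string Demo()
    {
        string xml = "<pnp:Provisioning xmlns:pnp=\"" + PnpNamespace + "\">"
                   + "<pnp:Templates /></pnp:Provisioning>";
        using (Stream input = new MemoryStream(Encoding.UTF8.GetBytes(xml)))
        using (StreamReader reader = new StreamReader(AddComment(input, "Look at this sweet comment!")))
        {
            return reader.ReadToEnd();
        }
    }
}
```

Calling CommentSketch.Demo() from a console app or a unit test gives you the modified XML as a string, which makes it easy to eyeball where the comment landed before wiring the logic into a real extension.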

Using Your Extension

Great, so now we’ve got an extension! How do we use this thing? In .NET it’s as simple as initializing our extension class and passing it into the XMLTemplateProvider’s SaveAs method (see the announcement for an example).

In PowerShell, we can write a script to load the extension from our dll and provide it in the TemplateProviderExtensions argument to the Get-SPOProvisioningTemplate or Apply-SPOProvisioningTemplate cmdlets.

Here’s an example of a PowerShell script that uses my custom CommentIt extension (be sure to check your dll location):

    [CmdletBinding()]
    param
    (
        [Parameter(Mandatory = $true, HelpMessage="Enter the URL of the target site, e.g. 'https://intranet.mydomain.com/sites/targetSite'")]
        [String]
        $TargetSiteUrl,

        [Parameter(Mandatory = $false, HelpMessage="Enter the filepath for the template, e.g. Folder\File.xml or Folder\File.pnp")]
        [String]
        $FilePath,

        [Parameter(Mandatory = $true, HelpMessage="Enter the comment to add!")]
        [String]
        $TemplateComment,

        [Parameter(Mandatory = $false, HelpMessage="Optional administration credentials")]
        [PSCredential]
        $Credentials
    )

    # Fall back to a default output path when none was supplied
    if(!$FilePath)
    {
        $FilePath = "Extractions\site.xml"
    }

    # Prompt for credentials when none were supplied
    if($Credentials -eq $null)
    {
        $Credentials = Get-Credential -Message "Enter Admin Credentials"
    }

    Write-Host -ForegroundColor Yellow "Target Site URL: $TargetSiteUrl"

    try
    {
        Connect-SPOnline $TargetSiteUrl -Credentials $Credentials -ErrorAction Stop

        [System.Reflection.Assembly]::LoadFrom("MyExtension\bin\Debug\MyExtension.dll") | Out-Null
        $commentIt = New-Object MyExtension.CommentIt
        $commentIt.Initialize($TemplateComment)

        Get-SPOProvisioningTemplate -Out $FilePath -Handlers Lists,Fields,ContentTypes,CustomActions -TemplateProviderExtensions $commentIt

        Disconnect-SPOnline
    }
    catch
    {
        Write-Host -ForegroundColor Red "Exception occurred!"
        Write-Host -ForegroundColor Red "Exception Type: $($_.Exception.GetType().FullName)"
        Write-Host -ForegroundColor Red "Exception Message: $($_.Exception.Message)"
    }

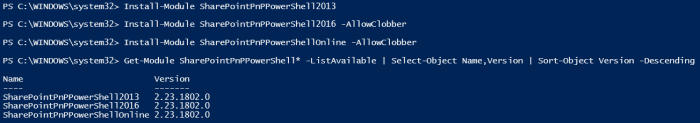

Please note that you’ll need the PnP PowerShell Cmdlets installed for this to work. Instructions can be found here. I am using the 2013 on-premises version, but this script will work with whatever version you’re using.

Here’s what’s happening in this script:

- The param block and the two if checks: Just setting up the parameters for the script. Nothing too special here
- The first Write-Host: Always nice to remind the user of important details
- Connect-SPOnline: Connect to SharePoint with a single line – wowee!
- LoadFrom: Load up your dll from the file system (you can provide a full or relative path here). The pipe to Out-Null just keeps us from printing dll information to the console, which would be strange to an end user
- New-Object: Get your extension class as an object using the namespace from your dll
- $commentIt.Initialize: Call the Initialize method of the extension. In this case we are passing in the comment received as a parameter to the script
- Get-SPOProvisioningTemplate: This is a standard call to Get-SPOProvisioningTemplate with the exception that we are specifying our custom extension in the TemplateProviderExtensions parameter
- Disconnect-SPOnline: Close up that connection

If we take a look at the XML file generated by our template (with a TemplateComment parameter of Look at this sweet comment!), we can see our comment sitting right at the top of the root node.

Aw yeah, boyo!

Debugging Your Extension

Generally, you’re going to be doing something more complicated than that and you’ll probably want to debug the thing. If you are calling your extension in .NET within Visual Studio then things are pretty much as you’d expect – Set your breakpoints and run the thing. PowerShell is a little less obvious.

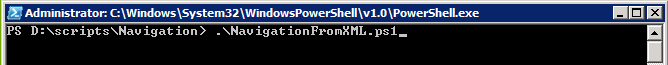

To debug your extension in the script above, you just need to set your breakpoints within the extension (say on the Initialize method). Then use the Debug > Attach to Process command within Visual Studio. Scroll through the processes until you find where your PowerShell script is running. I generally use the Windows PowerShell ISE to edit my scripts and that shows up as powershell_ise.exe. Choose it, then click Attach.

Now when you run your script, your breakpoints should be hit. Fun note: the PowerShell process keeps the dll loaded, so you’ll need to close and reopen the PowerShell window to release it before you can make adjustments and rebuild.

Now you’re ready to take advantage of this incredibly powerful extension point! You can find the full code for this sample extension here. Have fun!